The first step is to make sure you are using Unisphere and are running FLARE 30. Without FLARE 30, none of these steps are possible.

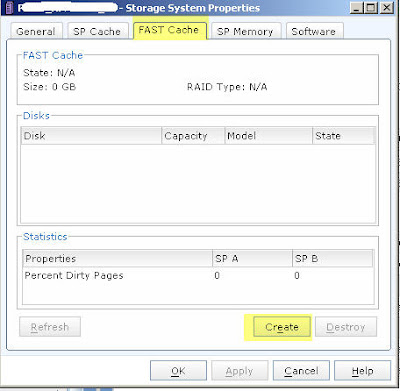

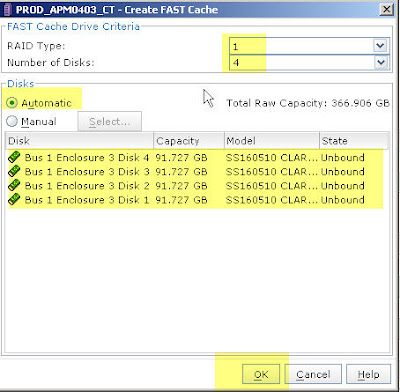

Then connect your EFD (Enterprise Flash Drive) disks. In my case that was five 100 GB flash disks, which build two RAID 1 mirrors plus one hot spare, providing 200 GB of FAST Cache.
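The capacity arithmetic behind that layout can be sketched as follows. This is only an illustration, assuming the nominal 100 GB per disk used in this post. Real formatted EFD capacity is somewhat lower. Because each RAID 1 mirror keeps two copies of the same data, only one disk per pair counts toward usable cache.

```python
# Sketch of the FAST Cache sizing arithmetic for RAID 1 mirrors plus hot spares.
# Assumption: nominal disk sizes; formatted capacity on the array will be lower.

def fast_cache_capacity(total_disks: int, hot_spares: int, disk_size_gb: int) -> tuple[int, int]:
    """Return (number of RAID 1 mirrors, usable FAST Cache capacity in GB)."""
    cache_disks = total_disks - hot_spares
    if cache_disks <= 0 or cache_disks % 2:
        # RAID 1 needs disks in pairs, so the non-spare count must be even.
        raise ValueError("RAID 1 mirrors need a positive, even number of disks")
    mirrors = cache_disks // 2
    # Each mirror stores one copy of the data twice, so usable space is one disk per pair.
    return mirrors, mirrors * disk_size_gb


if __name__ == "__main__":
    mirrors, usable = fast_cache_capacity(total_disks=5, hot_spares=1, disk_size_gb=100)
    print(f"{mirrors} RAID 1 mirrors, {usable} GB of FAST Cache")
```

With the five 100 GB disks described above, this prints `2 RAID 1 mirrors, 200 GB of FAST Cache`.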

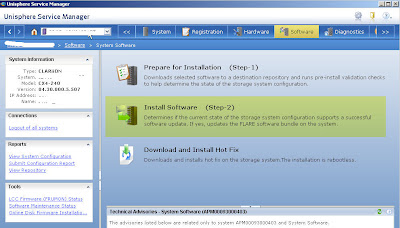

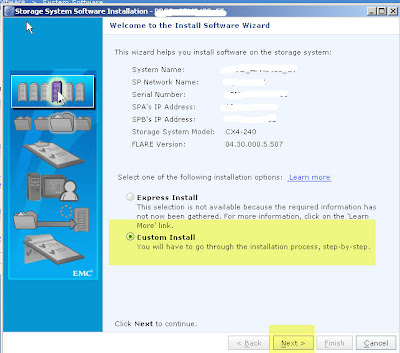

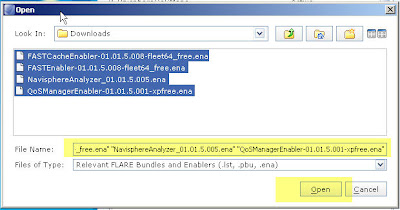

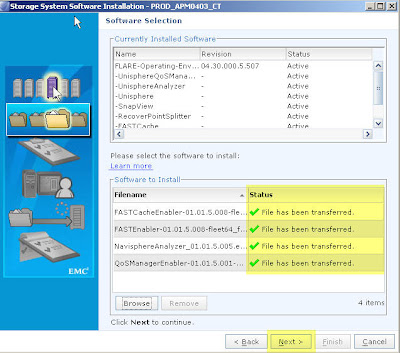

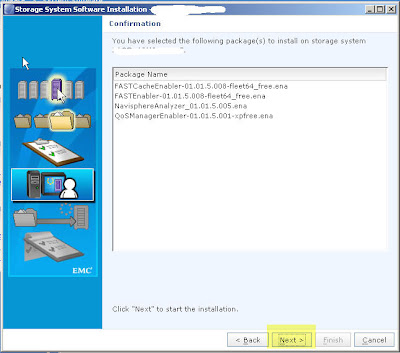

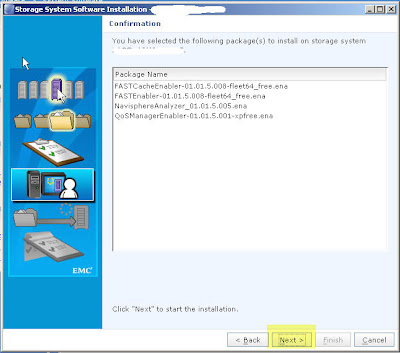

Your FAST Cache software arrives on CDs. Each CD contains a .ena file, which you need to copy into a folder on the computer where you will run Unisphere Service Manager (USM). On my system, the default location I had to copy the software to was C:\EMC\repository\Downloads
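If you have several CDs to copy, a small script can save some clicking. This is a minimal sketch, not an EMC tool. It assumes the CD is mounted at `D:\` (a hypothetical drive letter; adjust it to yours) and uses the default repository path mentioned above. The helper name `copy_enablers` is hypothetical. It matches the `.ena` extension case-insensitively, because files on CDs are often named in upper case.

```python
import shutil
from pathlib import Path

# Assumption: the CD drive letter; change this to match your machine.
CD_ROOT = Path("D:/")
# Default USM download location reported in this post.
USM_DOWNLOADS = Path(r"C:\EMC\repository\Downloads")


def copy_enablers(src: Path, dest: Path) -> list[str]:
    """Copy every .ena file found under src into dest and return the copied file names."""
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(src.rglob("*")):
        # Match the extension case-insensitively (e.g. both .ena and .ENA).
        if path.is_file() and path.suffix.lower() == ".ena":
            shutil.copy2(path, dest / path.name)  # copy2 also preserves timestamps
            copied.append(path.name)
    return copied


# Only run against the real drive when it is actually present.
if __name__ == "__main__" and CD_ROOT.exists():
    for name in copy_enablers(CD_ROOT, USM_DOWNLOADS):
        print(f"copied {name}")
```

Run it once per CD. Files with the same name are overwritten in the destination folder.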

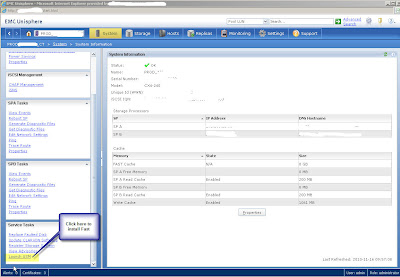

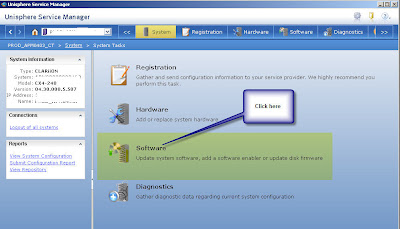

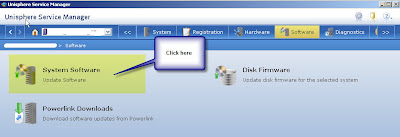

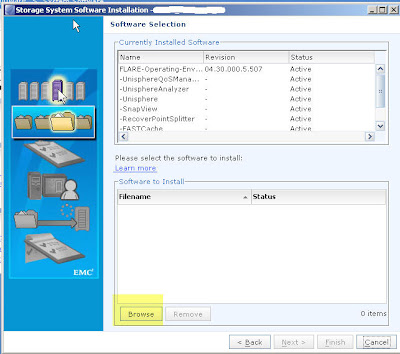

Then, from inside Unisphere, click Launch USM under Service Tasks.

EMC support told me to simply select all 4 disks as RAID 1; behind the scenes, it creates 2 RAID 1 mirrors.

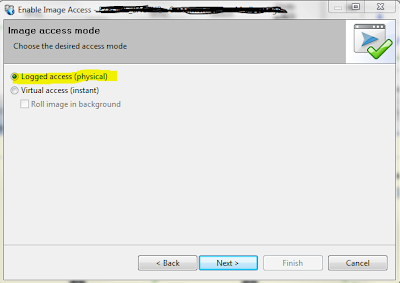

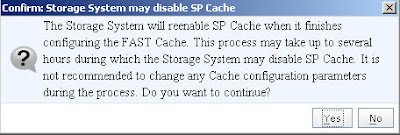

This next screen may give you a scare; it did for me, and I called support. It should only disable the SP cache for a few seconds to minutes while it rebuilds the memory map in RAM to include the SSD disks. For me it took about 2 minutes in total and did not appear to impact performance.

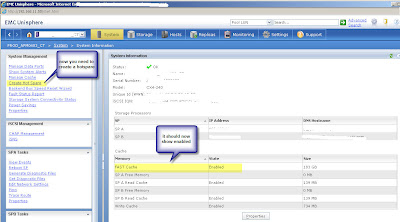

You should now see that FAST Cache is enabled. You also need to assign a hot spare.

Select Manual and choose the SSD disk as the hot spare.

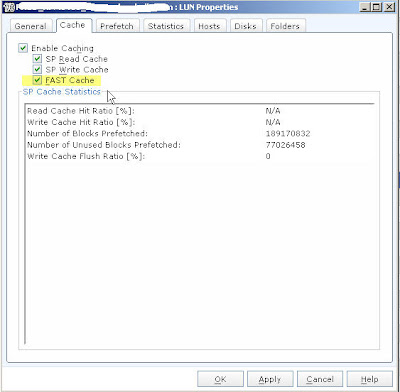

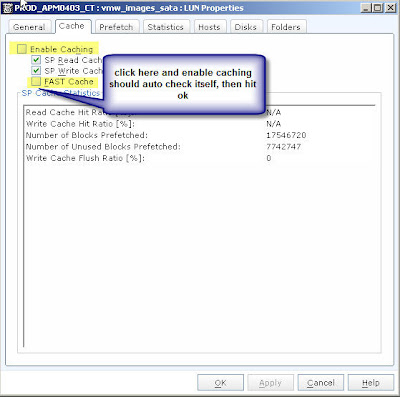

Now open the properties of the LUN you want to enable FAST Cache on and check FAST Cache. The enable caching option should also be checked automatically. Click Apply, then sit back and let FAST Cache do the work for you.

You can use Navisphere Analyzer to view FAST Cache statistics and confirm that it is working properly.
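One simple way to read those statistics is as a hit ratio: the fraction of I/Os served from FAST Cache rather than from the underlying disks. The sketch below is a generic calculation, not an Analyzer feature. It assumes you have noted hit and miss counts per sampling interval (for example, from an Analyzer export). The function names and the input format are hypothetical. Intervals are weighted by their I/O count, so a quiet interval does not skew the overall figure.

```python
from typing import Iterable


def hit_ratio(hits: float, misses: float) -> float:
    """Fraction of I/Os served from cache: hits / (hits + misses); 0.0 when there was no I/O."""
    total = hits + misses
    return hits / total if total else 0.0


def overall_hit_ratio(samples: Iterable[tuple[float, float]]) -> float:
    """Combine (hits, misses) samples from several intervals, weighted by I/O count."""
    total_hits = 0.0
    total_misses = 0.0
    for hits, misses in samples:
        total_hits += hits
        total_misses += misses
    return hit_ratio(total_hits, total_misses)
```

A ratio that climbs over the first hours or days after enabling FAST Cache is what you would hope to see as hot data gets promoted to the flash disks.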